Have you ever wondered what happens when a highly motivated team is entirely wrong for the job at hand? The team works extremely hard, slogging long hours and killing itself over weekends, only to end up dissatisfied with what it has (not) achieved. In other words, a square peg in a round hole. Sound familiar? Read on.

This case study applies to the service engineering team in any company of 1,000 or more people. In the industry, the service engineering team typically builds the framework that takes developers' code to production. This involves setting up a robust CI/CD (Continuous Integration/Continuous Delivery) pipeline, automating parts of the engineering and QA tests provided by the development team, running post-deployment smoke tests, and building a full-blown monitoring framework for all alerts around the code.

SE and SRE Teams

Most organizations combine SE and SRE into a single team, which they may choose to call either SE or SRE. But make no mistake: this team has two distinct and very conspicuous flavors, engineering and operations. Because most organizations build both flavors into one team, the same team has people with strong engineering competence as well as people with an operations bias. However, in some companies, especially the larger ones, the two flavors are separate teams working under different leadership toward the common goals stated above.

In some places, the engineering-focused team is called DevOps and the operations team is called SRE. Other companies call the engineering-focused team the Service Engineering team and the operations team simply the operations team.

At Yahoo, the engineering-biased team is the Service Engineering team (SE team) and the operations-focused team is the Service Reliability Engineering team (SRE team).

When the teams are created ab initio, the work is divided and defined for each team. The two teams are also seeded differently. The engineering-focused team has more people who can write code, understand it, dig into the innards of the code base, and file bugs when issues are caused by buggy code. They practically tell the developers, "Here is where your code is throwing an exception; please fix this part." So they "can read" and "understand" the code, but since they do not "own" it, they hand off bug resolution to the developers.

Then we have the SRE team, which is our first and second line of defense. It attends to nearly all alerts, provides first-level investigation and triage, and either resolves each alert or escalates it to the service engineering team. In a very mature SRE team, we expect 85-90% of alerts to be handled and resolved by the SRE team. The remaining 10-15% are escalated to the SE team, which resolves most of them. You can expect 1-2% of those alerts to be escalated to the development team.
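To make the escalation percentages concrete, here is a small Python sketch that splits a batch of alerts across the three tiers. It is illustrative only: it assumes the midpoint of each range (87.5% resolved by SRE, 1.5% of escalated alerts reaching development) and reads "those alerts" as the alerts escalated to SE. The `AlertFunnel` class and its parameter names are hypothetical, not part of any tool.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AlertFunnel:
    """Hypothetical model of alerts flowing SRE -> SE -> development."""

    sre_resolve_rate: float = 0.875        # assumption: midpoint of 85-90%
    dev_share_of_escalated: float = 0.015  # assumption: 1-2% of alerts that reach SE

    def split(self, total_alerts: int) -> dict[str, int]:
        # Alerts the SRE team cannot resolve are escalated to SE.
        to_se = round(total_alerts * (1 - self.sre_resolve_rate))
        # A small fraction of those escalations reaches the developers.
        to_dev = round(to_se * self.dev_share_of_escalated)
        return {
            "resolved_by_sre": total_alerts - to_se,
            "resolved_by_se": to_se - to_dev,
            "escalated_to_dev": to_dev,
        }


print(AlertFunnel().split(10_000))
# {'resolved_by_sre': 8750, 'resolved_by_se': 1231, 'escalated_to_dev': 19}
```

With 10,000 alerts, developers would see only about 19 under these assumptions, which is why a healthy SRE tier matters so much: every point it loses in resolve rate lands directly on the SE team.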

Looking at the nature of the work, SRE work is entirely interrupt-driven, while SE work is partially interrupt-driven and largely planned. Developers' work, on the other hand, should be almost entirely planned so that they can focus on new features, new products, and enhancements.

Over time, the teams may morph into something better. At that point, we say, "Oh, this team has really matured into a fantastic SRE (or SE, or development) team." That is mostly possible when the teams do the type of work they were designed to do, in the manner (interrupt-driven or plan-driven) they were conceived to do it. So this is the good scenario, eh?

When things start going awry…

What happens when the scenario does not turn out as well as we wanted, or as favorably for each team as we would have wished? Well, then we have a challenge…

If we do not continuously monitor the skill sets that seed these teams and the type of work that falls into their laps, it is quite possible that the fiber of the team(s) will undergo a mutation, typically for the worse.

Imagine a scenario where the SRE team suffers attrition. The reasons could be any number of things: poor leadership or a lack of it, weak management, gaps in people's expectations, the company not doing well, a killer workload, and so on. Suppose that, as an organization, we fail to see this coming, and even after it happens we do not backfill the vacancies quickly enough. The workload on the remaining people keeps increasing, because losing people does not necessarily reduce the workload. The remaining workforce is now short of resources and overloaded. This starts a downward spiral that, if not arrested quickly and effectively, can pretty much annihilate the SRE team. Once the SRE team shrinks without a proportional reduction in workload, work starts spilling over to the SE team. The SE team suddenly discovers, much to its angst and disappointment, that it is the de facto team doing SRE work, while expectations around SE work have not diminished at all. So the SE team starts focusing entirely on operational work and bends over backward to keep the site up.

During this time, the SE team has also changed its work routine as follows to accommodate the operations workload thrust upon it:

- Stops attending the development team's daily scrum meetings because SEs were up late the night before (or through weekends and long weekends) fighting operational issues and incidents

- No longer has time for code reviews with the development team

- No longer has time to build monitoring for the new feature pushed through the CI/CD pipeline last evening

- Lets its backlog of SE work build up

Not many people realize it, but during this time the SE team has also moved away from largely planned work, managed through Kanban/Scrum, to interrupt-driven work.

Slowly but steadily, the SE team becomes the new SRE team.

Management and leadership don't really mind: they have cut operating expenses by dismantling an entire team, the SRE team, and in their minds and words, they have made the SE team very "efficient."

Management is so focused on dollars that it misses the deep, dense forest for a few "shining" trees.

The whole change takes place over at least a year; it cannot happen in a few months.

Management is applauded and rewarded for cutting costs, and the "success stories" are told and retold to other teams. No one yet understands the deeper damage that this cost cutting has done to the SE team. By the time people and management realize it, the leadership responsible will long since have moved on to a different role, company, or team to continue the good work there.

Now let us bring our focus back to the poor SE team that has been forced to morph into an SRE team. Some very brilliant engineers on the SE team are unhappy with the current state of affairs and are waiting for management to put them out of their misery by recreating the SRE team. When they realize that management is not even thinking along those lines (recreating the SRE team and reinvesting in SRE people), they make up their minds and jump ship: to a different team, a different company, anywhere but their current team.

Now the next phase kicks in. The SE team starts seeing the same attrition the SRE team saw. The reasons may be the same or different, but the good and brilliant team members are the first to leave. The SE team's immediate management is suddenly running around like a headless chicken, firefighting to get enough resources to keep the site up. In the rush to get any and every resource they can find, they naturally lower the hiring bar.

Once the hiring bar is lowered, the once brilliant and industry-recognized SE team starts hiring sub-standard material. Please be aware that the new hires are not bad people. When I say "sub-standard," I do not mean that they are incompetent; I mean only that they are not the right fit for the SE team. They may be very hard-working, but they are ill-suited to an SE team that, until then, was seeded with brilliant engineers who fully understood how to turn "developer" code into "production-ready" code.

Now, this is where it gets interesting. In the first phase, we lost the SRE team. In the second phase, we forced the SE team to become a combined SRE and SE team. In the third phase, we made the SE team change entirely into an SRE team. In the fourth phase, we started losing the SE team. In the fifth and final phase, we hired and seeded the SE team with a different caliber of people.

…and here is the kicker: the SE team, now operating like an operations team, is at its lowest morale because the workload is entirely interrupt-driven, and by now it has lost the respect of the development team. At this point, the development team pretty much agrees that the SE team does no investigation at all on any issue before chucking it over the fence.

…And the disease spreads to the development team as well…

So now, in what is perhaps the last stage, the development team's workload shifts from entirely plan-driven to substantially interrupt-driven. A development team that was entirely focused on new products and new features is now forced to split its focus between new features/products and sustaining existing products or keeping the site up. A development team that used to bring at least two big products to market a year now struggles to get even one big product to beta.

The slowed-down development team frustrates its management, which is trying to catch up with or stay ahead of the competition. As a result, management keeps changing the roadmap and the plan of record.

In the past, the development team completed a big product in 4-6 months, which allowed management to make course corrections rapidly. Now the course corrections still come at the same pace as before, but the development team is moving much more slowly, so it is forced to absorb course corrections before its beta version is even out. The development team largely sees this as "scope creep" on the project. This frustrates the developers, who interpret it as "directionless" management.

The maladies of the SRE and SE teams have now become contagious and have started hitting the development team as well. Developers, frustrated by their management's constant changes of direction, start leaving, causing a drain even bigger than the SRE and SE attrition.

At this point, the whole organization is paralyzed by these issues and slows down to the extent that it eventually comes to a grinding halt. At this juncture, all the teams are focused on keeping the site up.

How do we rebuild from here?

The first thing we need to do is baseline our team to estimate the "damage," that is, to ascertain how much the team's bias has changed.

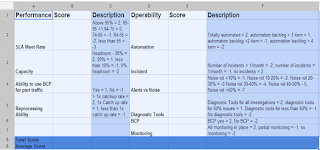

To measure where an SE, SRE, or development team stands, we can plot all the dimensions required for a good team of that type (SRE, SE with its engineering bias, or development) on the X axis and score each one on a scale of 0 through 10. Zero indicates a total absence of that dimension, and 10 indicates reasonably high proficiency in it.

For the purposes of this article, I will focus only on the Service Engineering team.

Let us also assume, hypothetically, that a very mature Service Engineering team in the industry scores 7.5 or above.
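The baselining step can be sketched in a few lines of Python. The dimension names below are hypothetical, drawn from the SE responsibilities listed at the start of this article, and collapsing them into one number with a plain mean is an assumption; a manager might reasonably weight some dimensions more heavily than others.

```python
from statistics import mean

# Hypothetical SE dimensions, taken from the SE responsibilities described
# earlier. Each is scored 0 (absent) to 10 (reasonably high proficiency).
SE_DIMENSIONS = (
    "ci_cd_pipeline",
    "test_automation",
    "smoke_tests",
    "monitoring_framework",
    "code_reading_and_bug_filing",
)

MATURE_SE_THRESHOLD = 7.5  # the hypothetical "very mature" bar assumed above


def eo_factor(scores: dict[str, float], dimensions: tuple[str, ...] = SE_DIMENSIONS) -> float:
    """Aggregate per-dimension scores into one EO factor (assumption: plain mean)."""
    missing = [d for d in dimensions if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    for d in dimensions:
        if not 0 <= scores[d] <= 10:
            raise ValueError(f"{d} must be scored 0-10, got {scores[d]}")
    return float(mean(scores[d] for d in dimensions))


# Made-up baseline for an SE team that has been absorbing operations work.
baseline = {
    "ci_cd_pipeline": 8,
    "test_automation": 6,
    "smoke_tests": 7,
    "monitoring_framework": 5,
    "code_reading_and_bug_filing": 4,
}
score = eo_factor(baseline)
print(f"EO factor: {score:.1f}, mature: {score >= MATURE_SE_THRESHOLD}")
# EO factor: 6.0, mature: False
```

Keeping the dimensions as a parameter reflects the point that each team chooses its own dimensions; a Big Data SE team would pass a different tuple.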

There is a distinct difference between the Service Engineering team and the Service Reliability Engineering (SRE) team. Both are vital to a company. At the risk of being shouted down by many, I would say that, for a company, the SRE team is more important than the SE team. To explain why, I draw on the metaphor of a hospital. Every hospital has an Emergency Room (ER), which some places call a trauma center. The ER receives people who are in dire need of immediate care and would otherwise die: accident victims, heart attack patients, victims of gun violence… ER staff are America's lifeline and the first line of response. Without these wonderful folks, we would have hundreds of thousands of additional casualties in the US every year.

Then there is the rest of the medical system, which focuses more on diagnosis and preventive medicine. For these people, too, every day is a "Monday": they don't have holidays. They are the ones who save us daily.

Similarly, the SRE team is our first line of defense. These are the people who receive alerts and respond to them immediately. Their response varies depending on how egregious an alert is and how critical the affected property is. For example, in the case of a DoS attack, they may start an IRC channel, collaborate with other companies facing the same challenge, and do everything they can to repel the attack. Actually, DoS would need a separate book by itself. LOL!

The SE team is the rest of the medical system: it has much more time than the ER (the SRE team) and can therefore focus on addressing causes rather than just symptoms.

Engineering-Operations Graphs or EO Factor

I call these graphs Engineering-Operations graphs, or the "EO factor" for short. The idea is similar to pH, which indicates whether a solution is acidic or basic. Pure water has a pH of 7 and is considered neutral. A solution with a pH above 7 is alkaline (basic), and its alkalinity increases as the pH rises beyond 7. Similarly, as the pH falls below 7, the solution becomes more acidic.

The EO factor should be read the same way: as we move away from the "Operations Line," the team becomes more biased toward and focused on one track, operations or engineering. As you move north of this line, the team becomes increasingly engineering-focused; as you move south of it, the team has more operations competence.

Any team can use this graph with its own dimensions. For example, an SE or SRE team working on Big Data systems will have somewhat different dimensions than a team working on Mail or on the company's landing page. It is entirely up to the managers to figure out the correct dimensions for measuring their teams and then to use those dimensions to chart the future course.

Figure: EO Factor

Build the new team...

Once you have ascertained the baseline, take remedial steps to rebuild the team, slowly and steadily.

Seed it with the right skill set, keep the right type of workload, and make sure that workload arrives in the right manner (interrupt-driven or planned). Keep irrigating and feeding the team with the right talent. And above all, watch it carefully, as shown below.

Watch the team's focus...

Take a scenario where we have the EO factor for a team that has existed for a long period. We want to see whether the team stays true to the fiber it was created for or whether, over time, its bias has changed. A time-series trend graph is ideal for this.

Figure: EO Factor Trending

This time series helps us understand how the team is trending over time. Remember, neither side of this graph is good or bad. What you want is to keep a close watch on the team's tendency to shift its bias from operations to engineering or vice versa. Whatever bias the team was seeded with, you have a compelling reason to keep it on that side. If you are not watchful and mindful of this, teams always tend to drift from one focus to the other.
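The trend graph can also be summarized numerically: fit a least-squares line through the team's EO factor history and look at the sign of its slope. In this sketch, the quarterly history and the 0.1-per-quarter tolerance are made-up illustrative values, and `eo_trend` and `drift_direction` are hypothetical helper names.

```python
def eo_trend(history: list[float]) -> float:
    """Least-squares slope of the EO factor per measurement period."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two measurements to compute a trend")
    x_mean = (n - 1) / 2  # periods are numbered 0, 1, ..., n-1
    y_mean = sum(history) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(history))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def drift_direction(history: list[float], tolerance: float = 0.1) -> str:
    """Report which way the team's bias is moving; neither direction is 'bad'."""
    slope = eo_trend(history)
    if slope > tolerance:
        return "drifting toward engineering"
    if slope < -tolerance:
        return "drifting toward operations"
    return "stable"


# Hypothetical quarterly EO factors for an SE team absorbing SRE work.
se_history = [7.8, 7.6, 7.1, 6.5, 6.0, 5.4]
print(f"{eo_trend(se_history):+.2f} per quarter")  # -0.50 per quarter
print(drift_direction(se_history))  # drifting toward operations
```

A slope is less noisy than comparing the latest two points, and it matches the observation that this kind of change unfolds over a year or more rather than overnight.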

We should seed an SE team so that it has an EO factor of about 6 or above, and we must ensure that its EO factor always stays above that level. Similarly, we need to ensure that the SRE team has an EO factor below 5, or 5.5 at most, so that it keeps its operations bias.
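These bands translate into a simple check that can run on every new measurement. This is a sketch under stated assumptions: it uses the looser 5.5 upper bound for SRE, the thresholds are this article's rules of thumb rather than any standard, and the `TeamType` enum and helper names are hypothetical.

```python
from enum import Enum


class TeamType(Enum):
    SE = "SE"
    SRE = "SRE"


# Rule-of-thumb bands from the text; SRE uses the looser 5.5 bound (assumption).
SE_MIN_EO = 6.0
SRE_MAX_EO = 5.5


def in_band(team_type: TeamType, eo: float) -> bool:
    """True if the EO factor matches the bias the team was seeded with."""
    if team_type is TeamType.SE:
        return eo >= SE_MIN_EO
    return eo < SRE_MAX_EO


def out_of_band_periods(team_type: TeamType, history: list[float]) -> list[int]:
    """Indices of measurement periods where the team left its intended band."""
    return [i for i, eo in enumerate(history) if not in_band(team_type, eo)]


se_history = [7.8, 7.6, 7.1, 6.5, 6.0, 5.4]  # hypothetical quarterly values
print(out_of_band_periods(TeamType.SE, se_history))  # [5]
print(out_of_band_periods(TeamType.SRE, [4.2, 4.8, 5.6]))  # [2]
```

In the SE example, the team is still technically in band at 6.0 one quarter before it slips out, which is why the trend, not just the threshold, deserves attention.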

An SRE team can be seeded with very brilliant engineering-focused people as well. The challenge is that such people most likely do not enjoy operations work and may start rolling the same juggernaut that led to the SRE attrition in the first place.

Conclusion

We need to stay aware of the type of talent pool in every team and make sure that each team is staffed to do its right and intended job. This has to be monitored consistently and continually; otherwise, we reach a point where radical changes are needed. Making changes and seeing them take effect is a multi-year project and therefore painfully slow. Hence, a stitch in time now may save nine a few years later.