The great thing about gap maps is that they can be used to compare any entity. The entity can be products, sports teams, presidential candidates, tools, countries, choices... anything at all.

The way you do it is to find two determinant attributes: two very distinguishable, principal attributes that define the set of entities. To explain this further, let me take my favorite example. If I want to buy a new car, I could have miles per gallon and MTBR as the two determinant attributes. I could also have "new car smell" as one of the determinant attributes. Though it is a very endearing thing, the new car's smell is certainly not a good attribute to go by, especially when you are putting tons of money down on a car.

The moral of the story: a gap map is just a tool, and how reliable it is depends entirely on your choice of attributes. It is a classic case of GIGO (garbage in, garbage out): if you choose the right attributes, you will get a good gap map; bad choices will lead to a bad gap map.

Why do we need a good gap map? The simple reason is that a good map unravels so many stories about your product and similar products (read: the competition). A bad gap map may sweep many of the flaws under the rug and lead you to believe that you have a winning product.

Now, this is one part of the story. Hold this strand somewhere in your L2 cache while I do a context switch.

Let me start another thread in this story and see if I can bring the multiple threads together to weave a single story at some point in this post.

We went live with a product earlier this year. It is one of our Data Systems pipelines that carries data (aka events) from hundreds of thousands of serving hosts back to our own "Deep Blue" backend system, which then takes these petabytes of data and makes sense of them.

Before we went live, we did something called Operational Readiness Certification, or ORC. ORC is a long laundry list of hundreds of questions: some require subjective answers, some need numbers, and yet others get Boolean answers.

A good example of a question asked in ORC is "Do you have a BCP (business continuity plan)?" The answer is pretty Boolean: yes or no. (OK, there will always be the *cautious* types who start their answer with "It depends..." LOL!)

So this pipeline had passed ORC with flying colors and everyone was happy. However, when we started ramping up the volume, we found three conspicuous challenges in this pipeline:

- Backlog Catch-up Rate

- Reprocessing

- Data Discrepancies

Each of these three was causing us to throw tons of manual cycles at it, with no light at the end of the tunnel. There were no ready metrics that we could take to our product and engineering partners to tell them where this product stood in terms of production readiness and where we wanted it to be.

It was a perplexing problem. Luckily for us, our Dev team is brilliant and didn't need us to put a lot of data behind these three issues before picking them up for resolution.

So what was the problem statement? In a very high-level, bulleted form, it would look something like this:

- ORC is great but somewhat subjective and Boolean

- ORC is also a matrix of blockers, failures, exceptions and action items. Once you are past those, nothing more comes out of ORC.

- After ORC is done, what is the next step? All products are at the same level.

- No way to classify/score the maturity of a product compared to its peers

- No method of comparing different properties

- Comparison of similar systems could help

- ORC doesn't allow time-series trending

As I said above, we are lucky to have great developers at Yahoo, and within a quarter we had nearly solved all three problems. However, the lack of a good measurement method, a tool we could use to evaluate and compare our product with similar, very mature products in that space, frustrated us.

Now let me bring in the third strand of this story. I was reading a book on product management in which the authors discuss gap maps, and it dawned on me that if I had something similar, we could use it to compare the different properties at Yahoo that we support.

Bringing all the different strands together, I decided to use gap maps, which have been used for decades in the product management industry, to evaluate and compare our different data systems pipelines. The first and foremost challenge was to figure out the correct determinant attributes, because there are so many great attributes that could be used to peel this onion. I took a different approach and decided to create two uber attributes, each with any number of sub-attributes.

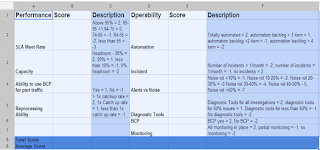

Sample attributes and sub-attributes

Performance and Operability were the two overarching attributes, and each had many sub-attributes. While selecting attributes and sub-attributes, keep in mind that different categories of properties need not have similar attributes. For example, a property like Yahoo Frontpage or Facebook's main landing page may have different attributes than a backend data warehouse system. Decide your attributes and sub-attributes carefully and diligently; this will be time well invested upfront in the whole exercise.

Once the sub-attributes have been chosen, you could use a simple spreadsheet to compute the values for the sub-attributes and aggregate them to arrive at a value for the parent attribute. Please see the figure below. I have also given some guidance for scoring them, but you should create your own guidance.
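The roll-up from sub-attributes to a parent attribute can be sketched in a few lines of Python. This is a minimal sketch, not the spreadsheet from the figure: it assumes a weighted mean as the aggregation rule and a signed -2 to +2 scoring scale (so a product can land on either side of an axis), and the sub-attribute names and weights are hypothetical.

```python
# Minimal sketch: roll sub-attribute scores up into one parent
# (determinant) attribute. ASSUMPTIONS: weighted mean as the aggregation
# rule, a -2..+2 scoring scale, hypothetical sub-attribute names/weights.


def aggregate(sub_scores: dict[str, float], weights: dict[str, float]) -> float:
    """Return the weighted mean of the sub-attribute scores.

    Sub-attributes that are missing from `weights` get a default weight of 1.0.
    """
    if not sub_scores:
        raise ValueError("need at least one sub-attribute score")
    weighted_sum = 0.0
    total_weight = 0.0
    for name, score in sub_scores.items():
        w = weights.get(name, 1.0)
        weighted_sum += w * score
        total_weight += w
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return weighted_sum / total_weight


# Hypothetical Operability sub-attribute scores for one pipeline.
operability = {
    "alerting_coverage": 1,
    "runbook_quality": 2,
    "manual_interventions_per_week": -1,
}
# Hypothetical weighting: manual interventions count double.
weights = {"manual_interventions_per_week": 2.0}

print(aggregate(operability, weights))  # 0.25
```

A weighted mean keeps the parent score on the same scale as its sub-attributes, which makes the two determinant attributes directly comparable on one graph; a plain sum or a minimum would also work if that matches your guidance better.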

Scoring spreadsheet sample

The point to keep in mind is that this guidance should not change from property to property (or product to product) within the same category. So if you are comparing different data warehouse systems or massive analytic systems, the guidance should remain the same. However, as I said above, different categories may have different attributes, some similar and some totally dissimilar, and their scoring guidance may also be vastly different from the scoring model above.
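One way to keep the scoring guidance identical for every product in a category is to encode it as fixed thresholds per category. The sketch below is only an illustration: the "data_warehouse" category, the single metric (backlog catch-up time in hours), the thresholds and the -2 to +2 scores are all hypothetical, and your own rubric will differ.

```python
from bisect import bisect_right

# HYPOTHETICAL scoring guidance. Every product in the same category is
# scored with the same rubric; a different category may use a different one.
RUBRICS = {
    "data_warehouse": {
        # Backlog catch-up time in hours: lower is better.
        "backlog_catchup_hours": {
            "thresholds": [4, 12, 24, 48],
            "scores": [2, 1, 0, -1, -2],  # one more score than thresholds
        },
    },
}


def score(category: str, metric: str, raw_value: float) -> int:
    """Map a raw metric value to a score using the category's fixed rubric."""
    rule = RUBRICS[category][metric]
    # bisect_right counts the thresholds that are <= raw_value, which is
    # exactly the index of the score band the value falls into.
    idx = bisect_right(rule["thresholds"], raw_value)
    return rule["scores"][idx]


print(score("data_warehouse", "backlog_catchup_hours", 6))   # 1
print(score("data_warehouse", "backlog_catchup_hours", 60))  # -2
```

Keeping the rubric in one shared table, rather than in each product's spreadsheet, makes it hard for two products in the same category to be scored by different rules by accident.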

Once you have the values for the two main determinant attributes from this type of spreadsheet, use them to plot each product on a simple X-Y graph.

The names of the products are somewhat fictitious, and so is the sample data (I could use the disclaimer "The characters and story in this movie are fictional and any resemblance to people, living or dead, is merely coincidental..." :) )

Sample Scores for POM

Once you have the values for the X and Y axes, plotting the graph is fairly simple.

POM Graph

Now that you have a graph showing where the different similar products fall, you may ask: now what?

Well, to start with, you (developers, service engineers, product managers, managers; in short, everyone connected with the product) can understand where your product stands compared to its peers.

The first endeavor should be to get your product into the first (positive) quadrant. Once it is in the positive quadrant, the next endeavor should be to keep moving it in the north-east direction.
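Checking which quadrant a product falls into is straightforward once each product has its two scores. This sketch assumes Operability on the X axis and Performance on the Y axis, with scores that can be negative; the product names and numbers are hypothetical placeholders, not the sample data from the figure.

```python
def quadrant(x: float, y: float) -> str:
    """Return the quadrant ("I" to "IV") of a point, or "axis" if it lies on an axis."""
    if x == 0 or y == 0:
        return "axis"
    if x > 0:
        return "I" if y > 0 else "IV"
    return "II" if y > 0 else "III"


# HYPOTHETICAL products: (operability, performance) scores.
products = {
    "product_a": (1.5, 0.75),
    "product_b": (-0.5, 1.0),
    "product_c": (-1.25, -0.5),
}

for name, (x, y) in products.items():
    print(name, quadrant(x, y))

# Products that still have to reach the first (positive) quadrant.
not_yet_positive = [n for n, (x, y) in products.items() if quadrant(x, y) != "I"]
print(not_yet_positive)  # ['product_b', 'product_c']
```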

It also helps you understand why EDW needs tons of manpower to support it, while the CMS data warehouse needs only half an FTE (full-time employee).

Further, the EDW folks can talk to the CMS folks to get a handle on the different things the CMS team did to get where they are.

Finally, if the EDW team starts working on improving the product, they can use two snapshots of this graph, the first one taken now and the next one a quarter or two later, to evaluate the progress (hopefully) that the product has made on the two determinant attributes.
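Comparing two snapshots comes down to the change in each determinant attribute. A minimal sketch, assuming each snapshot is just a product's (x, y) scores; here a move counts as north-east only if neither score went down and at least one went up, which is one reasonable reading of "north-east", not a rule from the original method. The `Snapshot` type and the numbers are hypothetical.

```python
from typing import NamedTuple


class Snapshot(NamedTuple):
    x: float  # ASSUMPTION: operability score at snapshot time
    y: float  # ASSUMPTION: performance score at snapshot time


def progress(before: Snapshot, after: Snapshot) -> tuple[float, float, str]:
    """Return (dx, dy, direction) between two snapshots of the same product."""
    dx = after.x - before.x
    dy = after.y - before.y
    if dx == 0 and dy == 0:
        direction = "no change"
    elif dx >= 0 and dy >= 0:
        # Neither attribute regressed and at least one improved.
        direction = "north-east"
    else:
        direction = "mixed or regressed"
    return dx, dy, direction


# HYPOTHETICAL snapshots taken a quarter apart.
print(progress(Snapshot(-0.5, 0.25), Snapshot(0.5, 0.75)))  # (1.0, 0.5, 'north-east')
```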

I'd love to get feedback.
